Abstract

Training generalist agents is difficult across several axes, requiring us to deal with high dimensional inputs (space), long horizons (time), and multiple and new tasks. Recent advances with architectures have allowed for improved scaling along one or two of these dimensions, but are still prohibitive computationally. In this paper, we propose to address all three axes by leveraging Language to Control Diffusion models as a hierarchical planner conditioned on language lcd.

We effectively and efficiently scale diffusion models for planning in extended temporal, state, and task dimensions to tackle long horizon control problems conditioned on natural language instructions. We compare LCD with other state-of-the-art models on the CALVIN language robotics benchmark and find that LCD outperforms other SOTA methods in multi task success rates while dramatically improving computational efficiency with a single task success rate (SR) of 88.7% against the previous best of 82.7%.

We show that LCD can successfully leverage the unique strength of diffusion models to produce coherent long range plans while addressing their weakness at generating low-level details and contro

Efficient Training & Inference

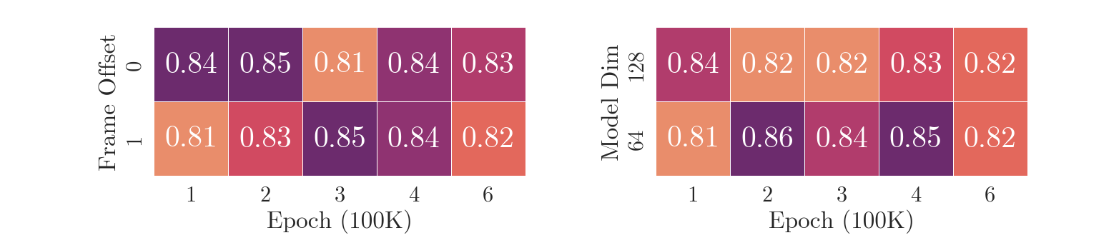

Robust Against Hyperparameters